AI

Too long, don't want to read? Listen instead. I've tried ~6 or so of the top text-to-speech apps; ElevenLabs has the best product. View the figures on the webpage after listening.

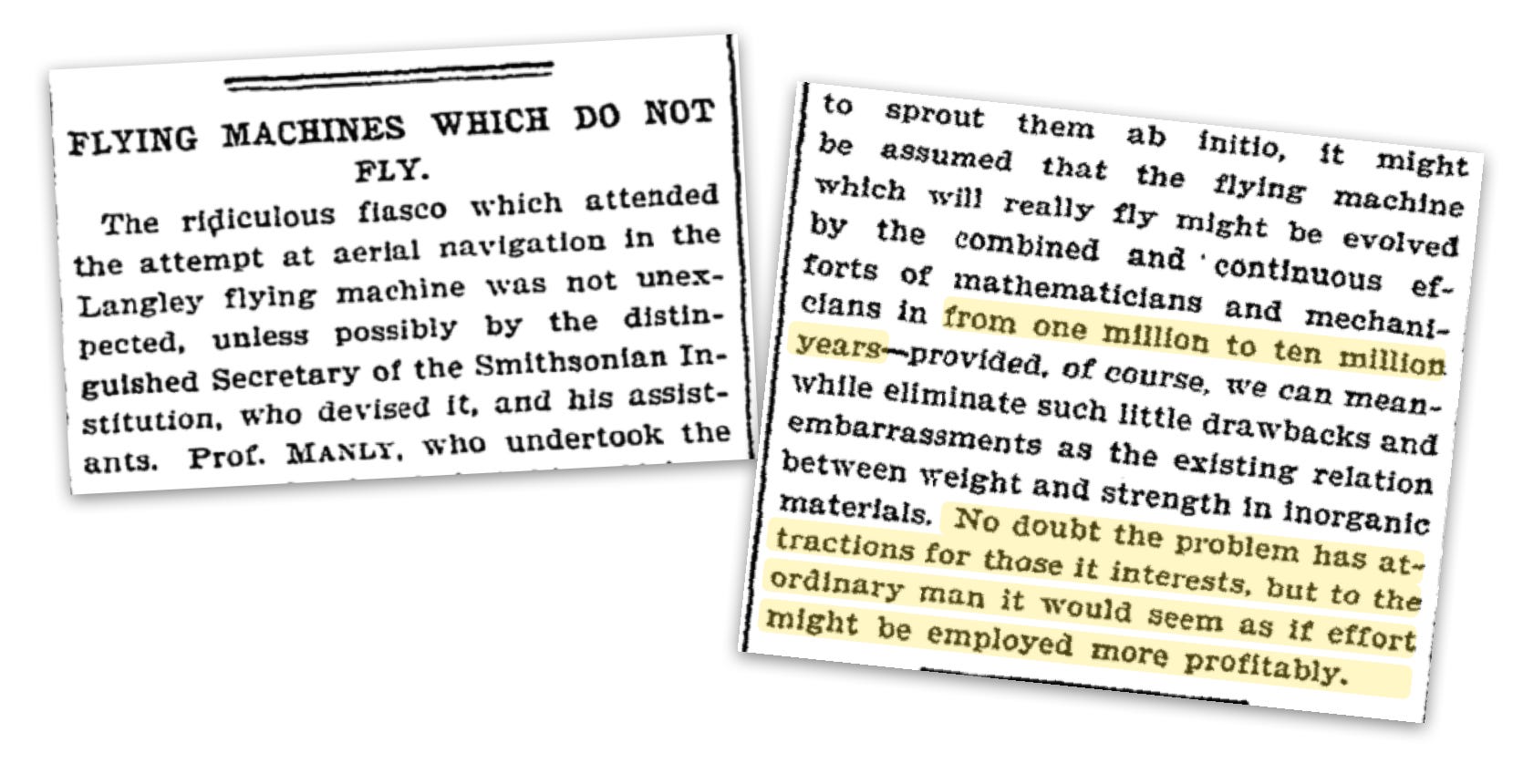

On 10/9/1903, following failed attempts by Samuel Langley to create a flying machine, the NYT published an article predicting one million to ten million years before man flies.

69 days later, on 12/17/1903, the Wright brothers achieved flight.

I left gopuff in January 2025 to focus on the future—Artificial Intelligence. I believe we are going through the biggest transformation in human history—the advent of artificial general intelligence. I believe this technology is coming far sooner than 99% of the American public predicts. There is no ceiling—nothing stopping artificial simulation of biological neurology. Parameter counts on today's largest models exceed the number of neurons in the human brain. But we will not stop once human intelligence is achieved—we will scale it until it improves itself, replacing human intellect in every walk of life. It is both an exciting and terrifying time to be alive. AI can provide limitless abundance or it can obliterate human existence. I believe it is the last technology—achieve it and it will achieve everything else.

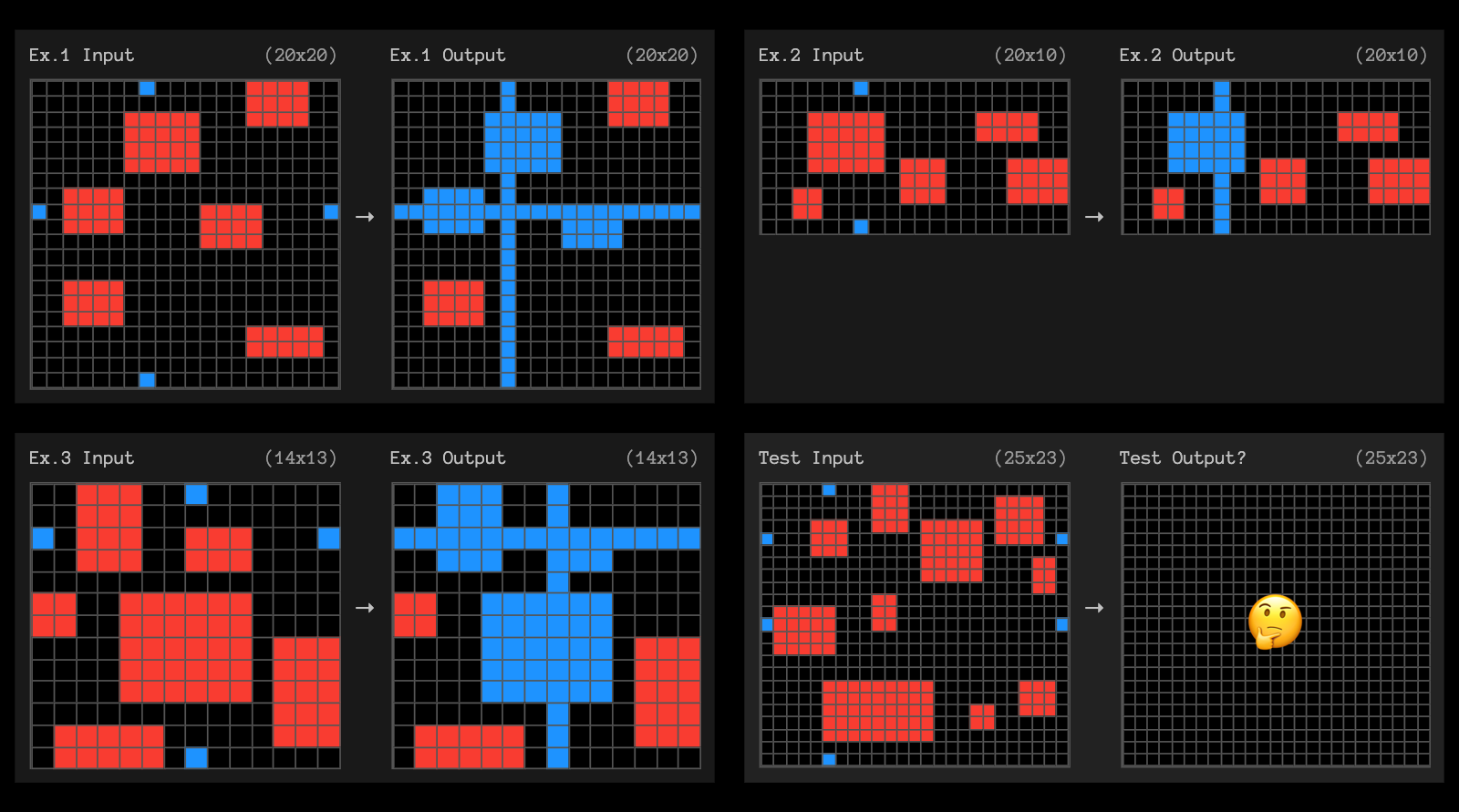

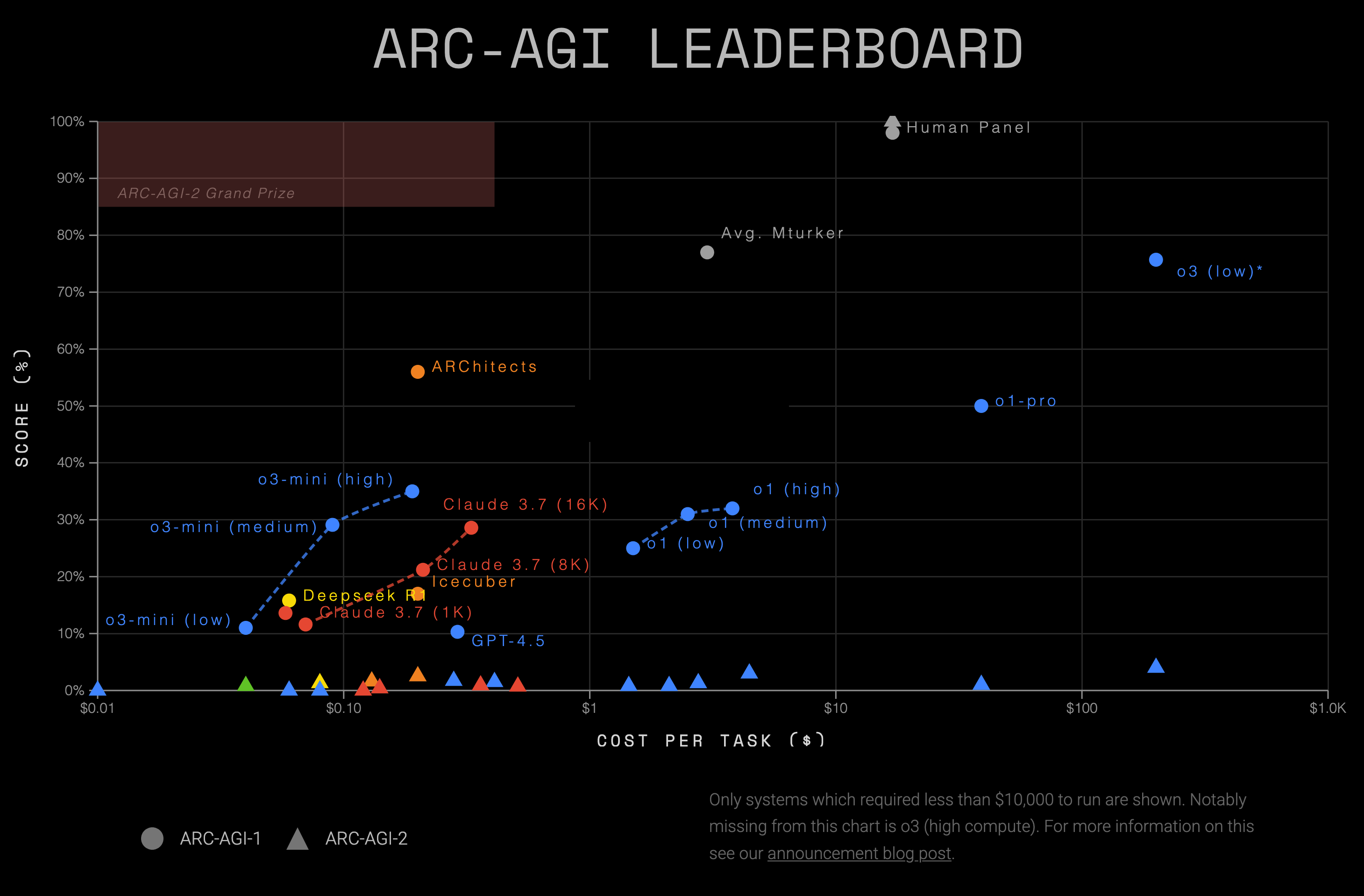

In 2019, François Chollet proposed the Abstract Reasoning Corpus for Artificial General Intelligence (ARC-AGI) benchmark, a dataset of highly-general grid problems like the above.

For the past five years, ARC-AGI stood in a category of its own as an established benchmark unapproachable to AI. No longer.

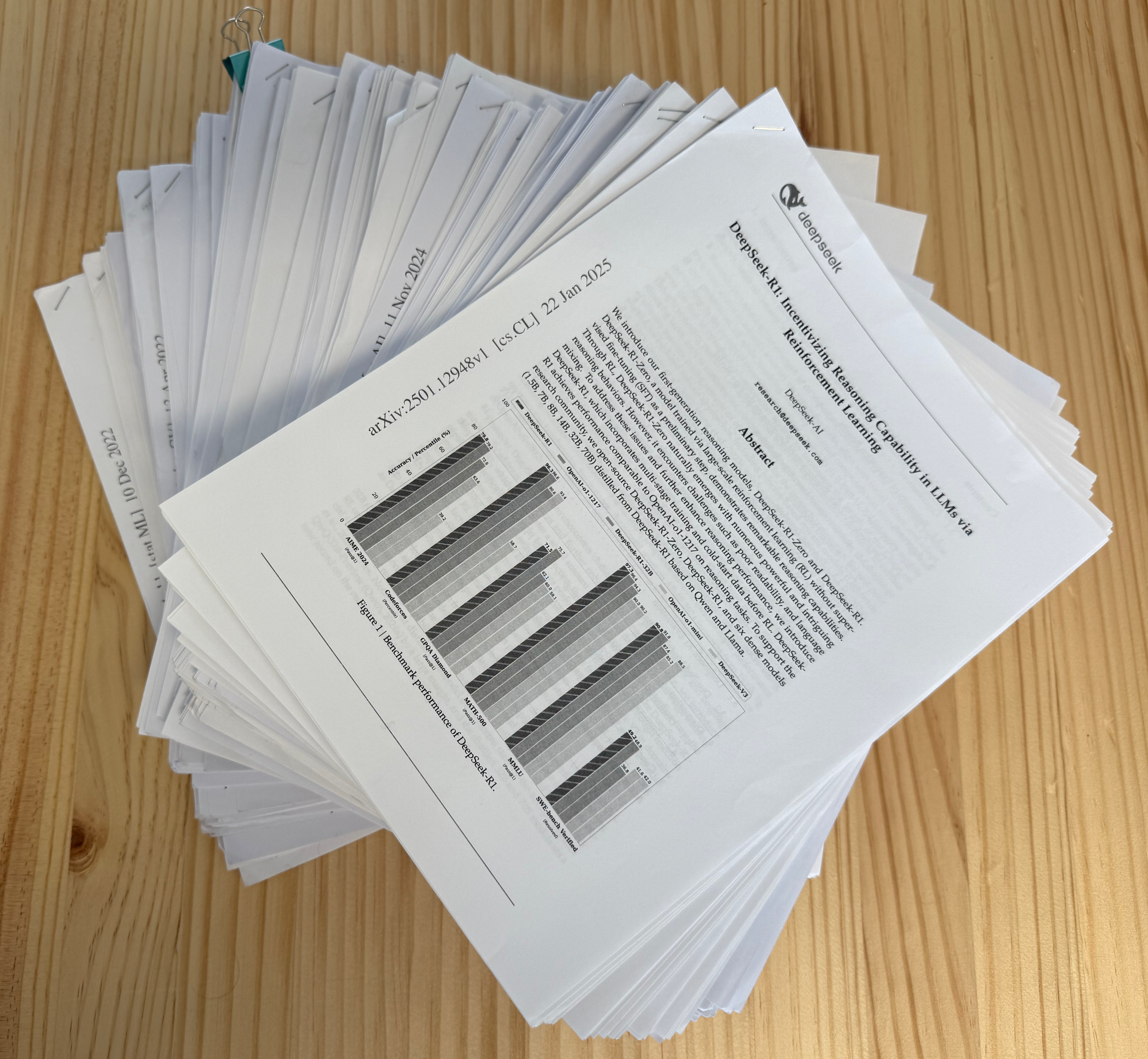

I have been reading papers since September 2024, finally now feeling relatively up-to-date on AI literature. I am interested in founding a startup in the space, ideally with other highly-competent individuals. The transition at hand brings a wealth of opportunity in a rapidly-changing landscape. Here are several core truths I believe can be harnessed today that will shape the future in years to come.

"The Stack"—My collection of roughly 50 AI papers totalling around 1000 pages.

-

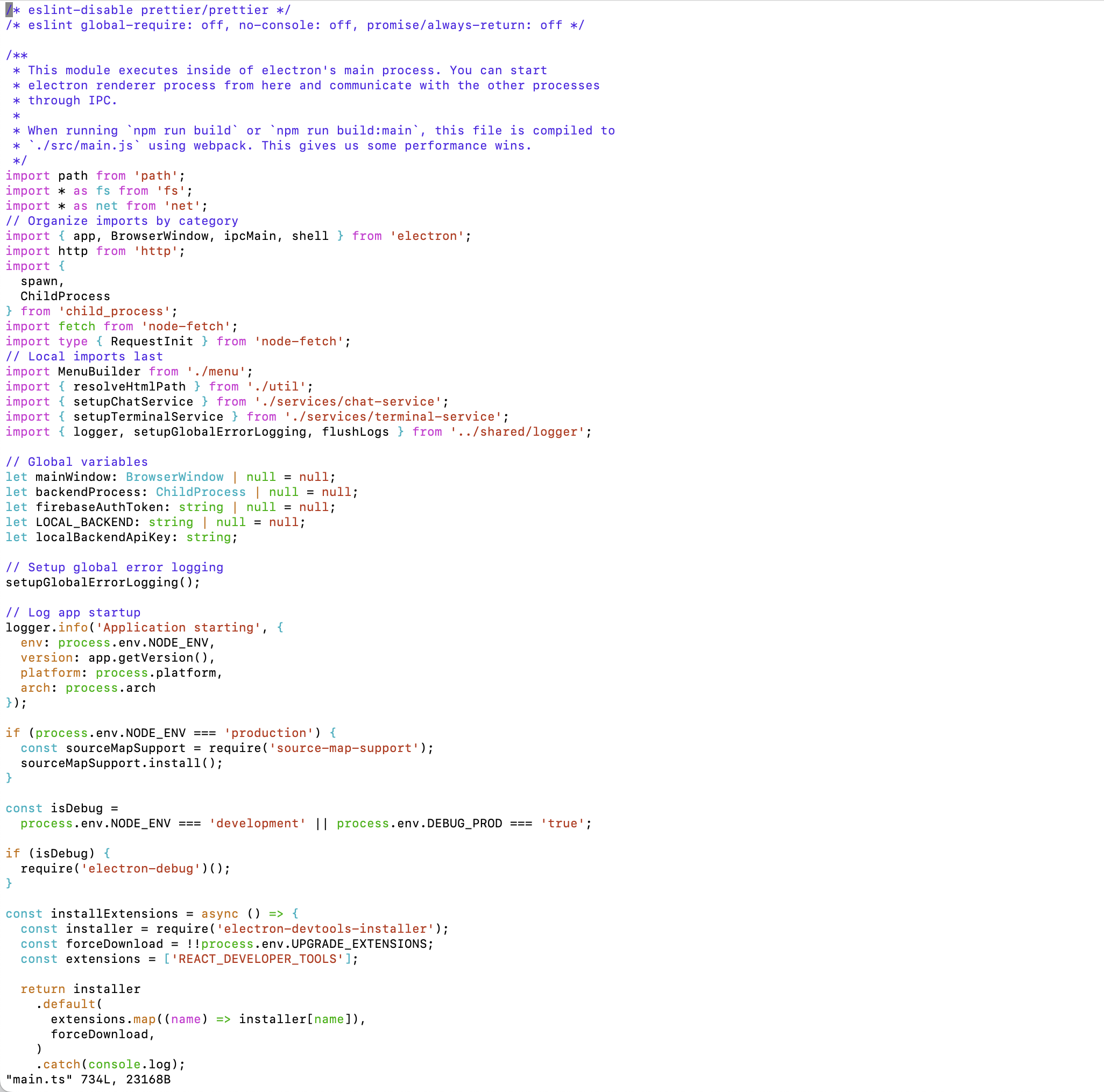

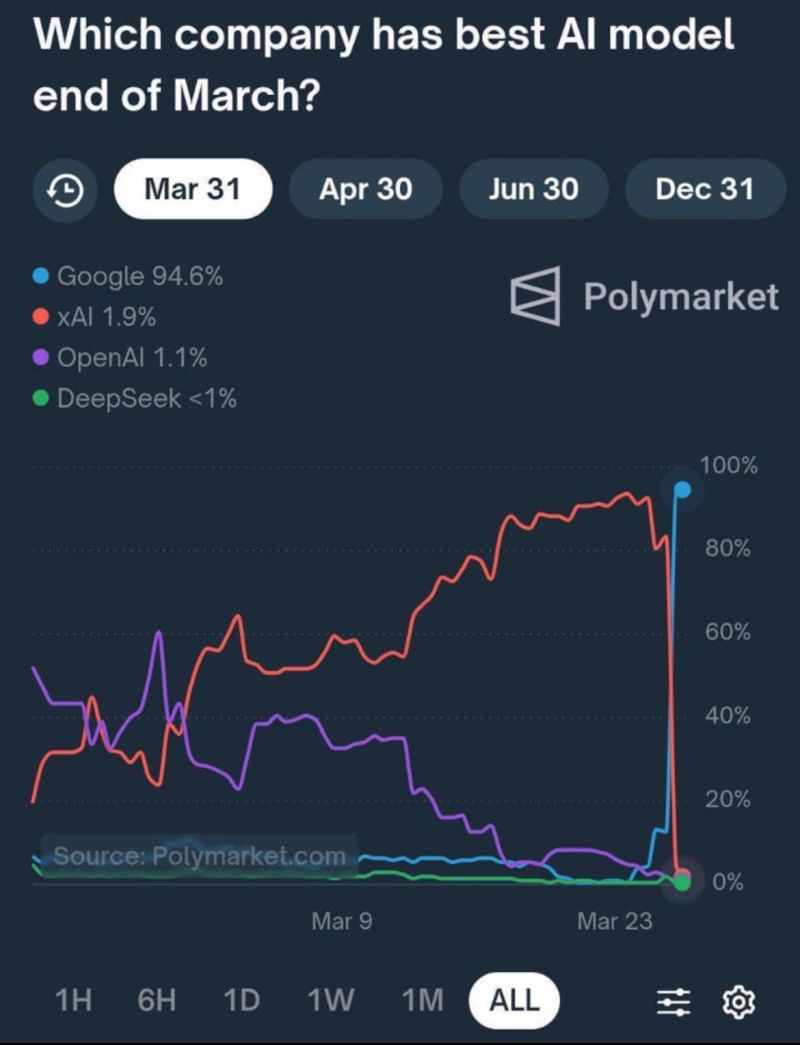

AI can now write code. In my own full-stack development, I find myself acting primarily as an AI supervisor rather than a programmer. This is the trajectory Dario Amodei has predicted across domains. I believe software engineering is the first domain in which this will be realized, and with the release of Claude 3.7 Sonnet, I find it to already be the case across much of programming. It is not surprising AI companies have prioritized it: computer code is perhaps the most in-demand commodity of the 21st century, while also being a form of language/logic that large language models, and more recently reasoning models, are quite capable of creating.

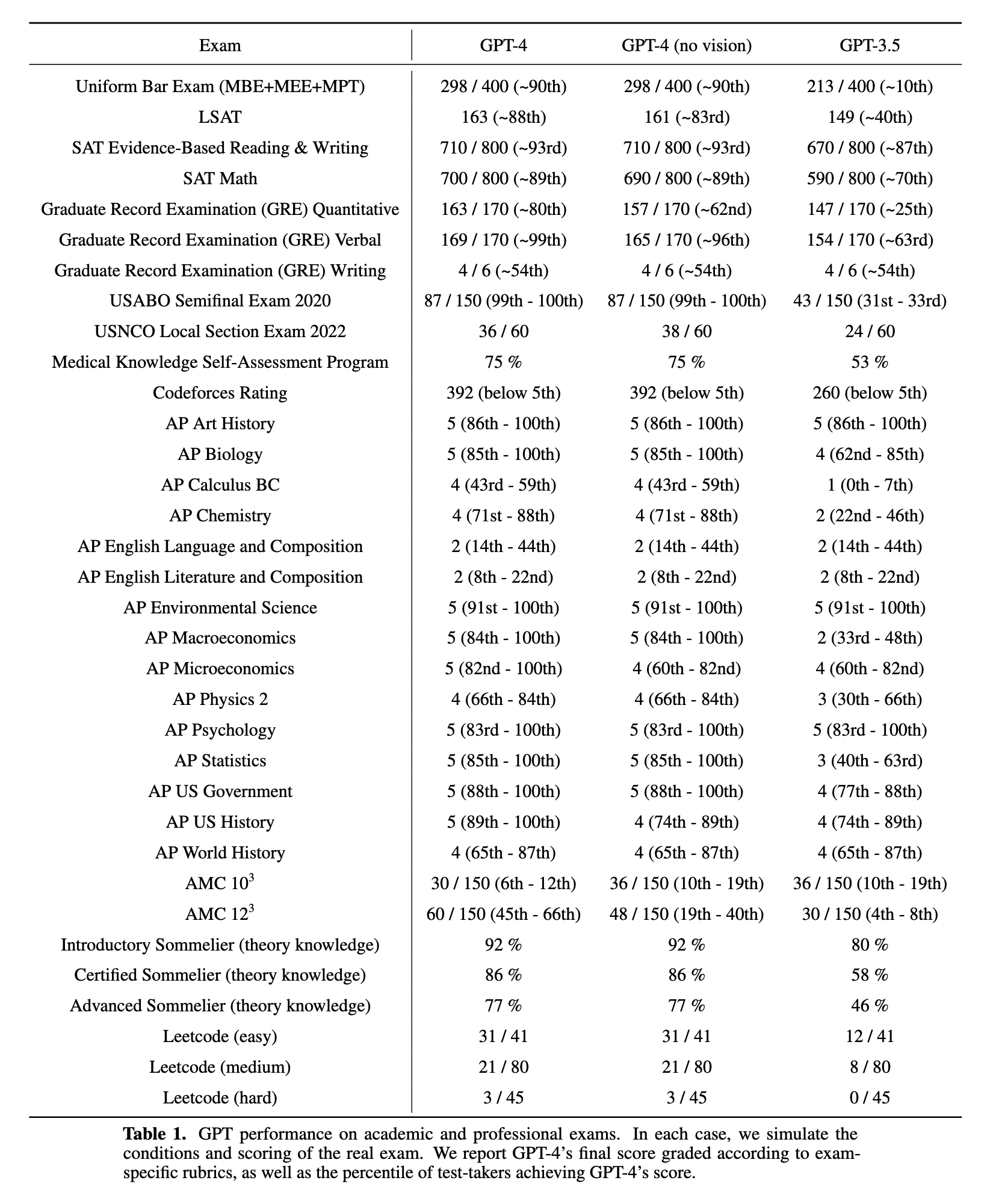

AI of 2023 already surpassing humans on important exams.

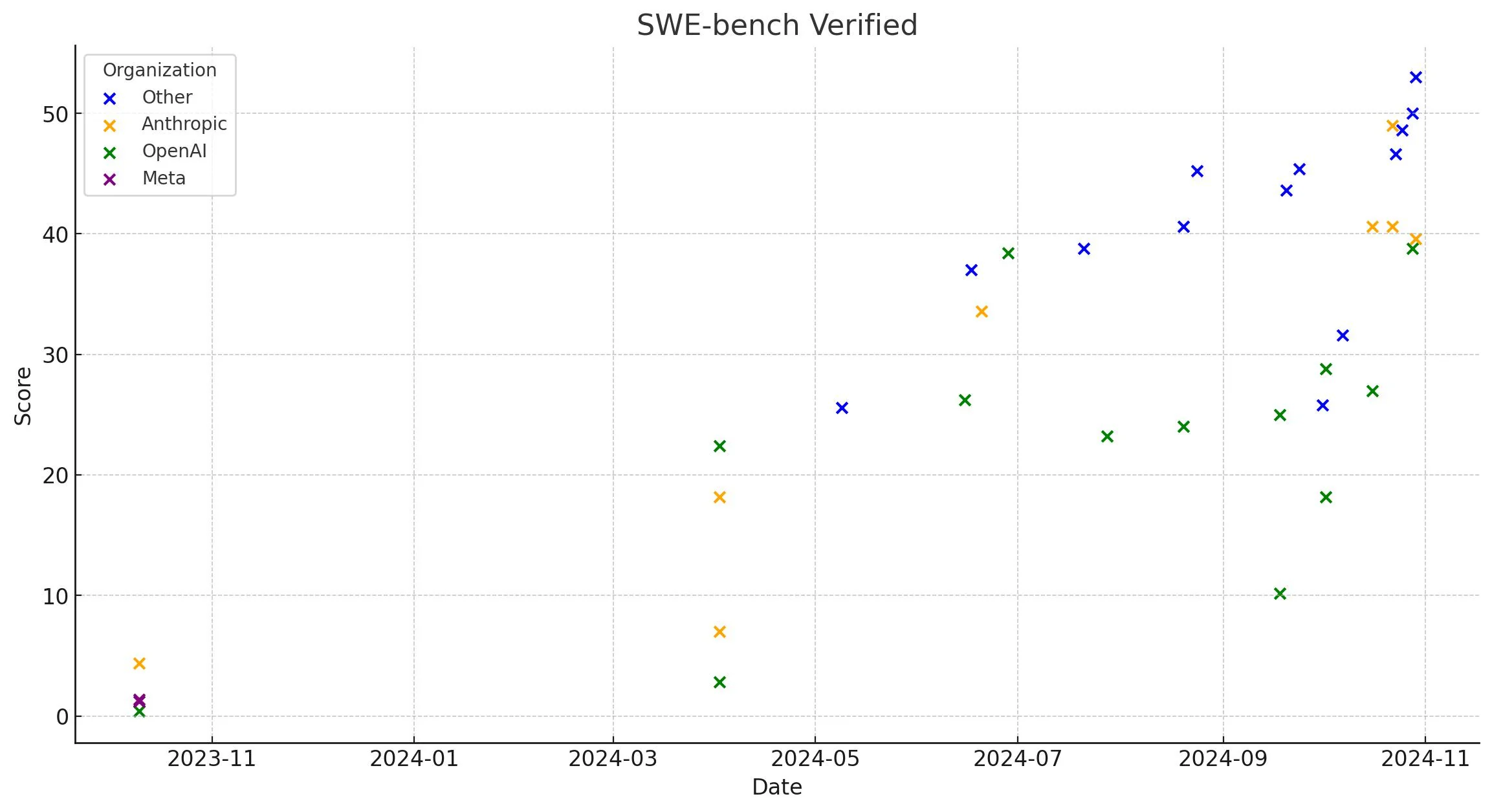

AI steadily-climbing a (the) software engineering benchmark.

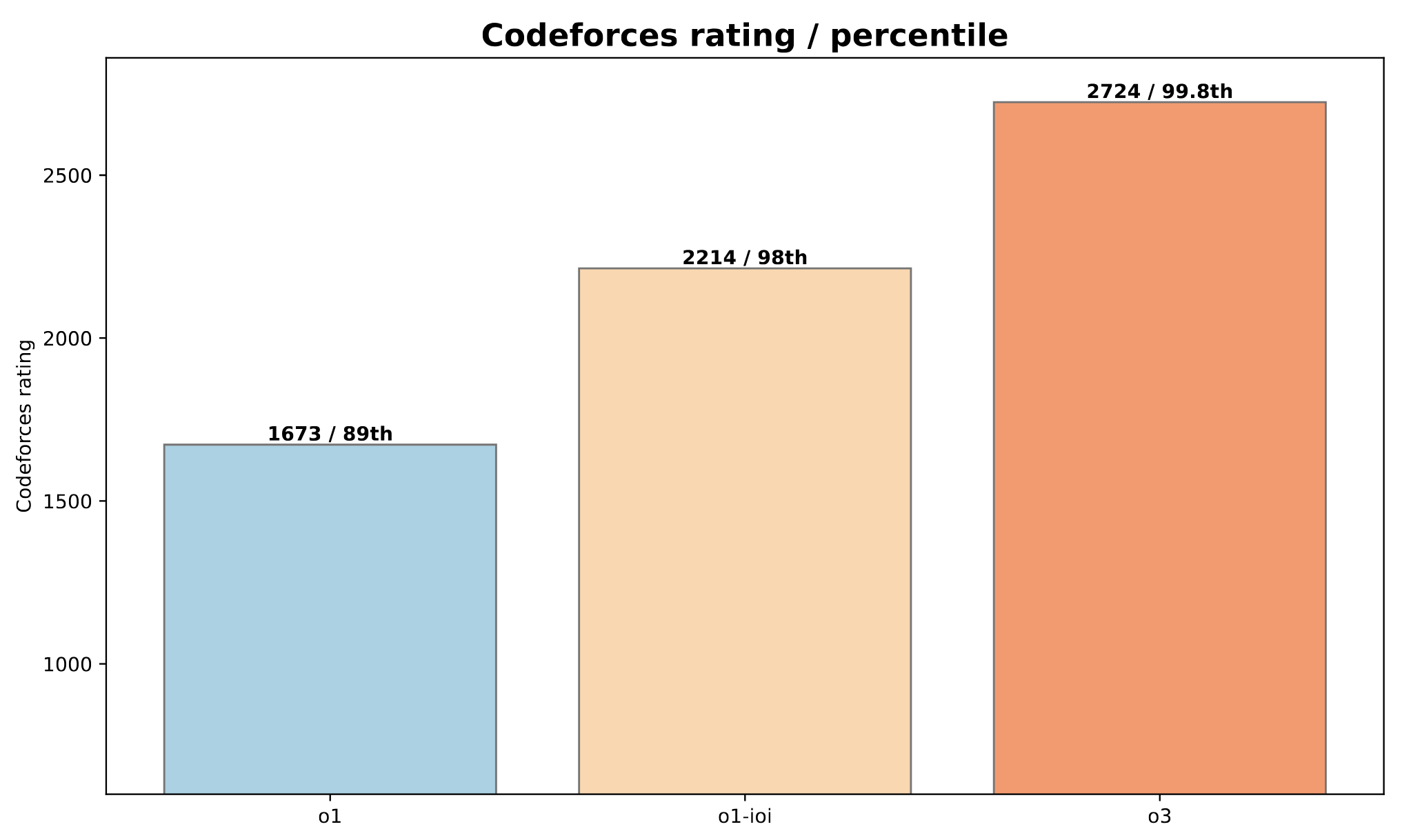

AI in competitive programming. My own Codeforces rating is 2001 (96th percentile), no longer competitive with AI. Two years ago (see Table 1) AI was 0th percentile.

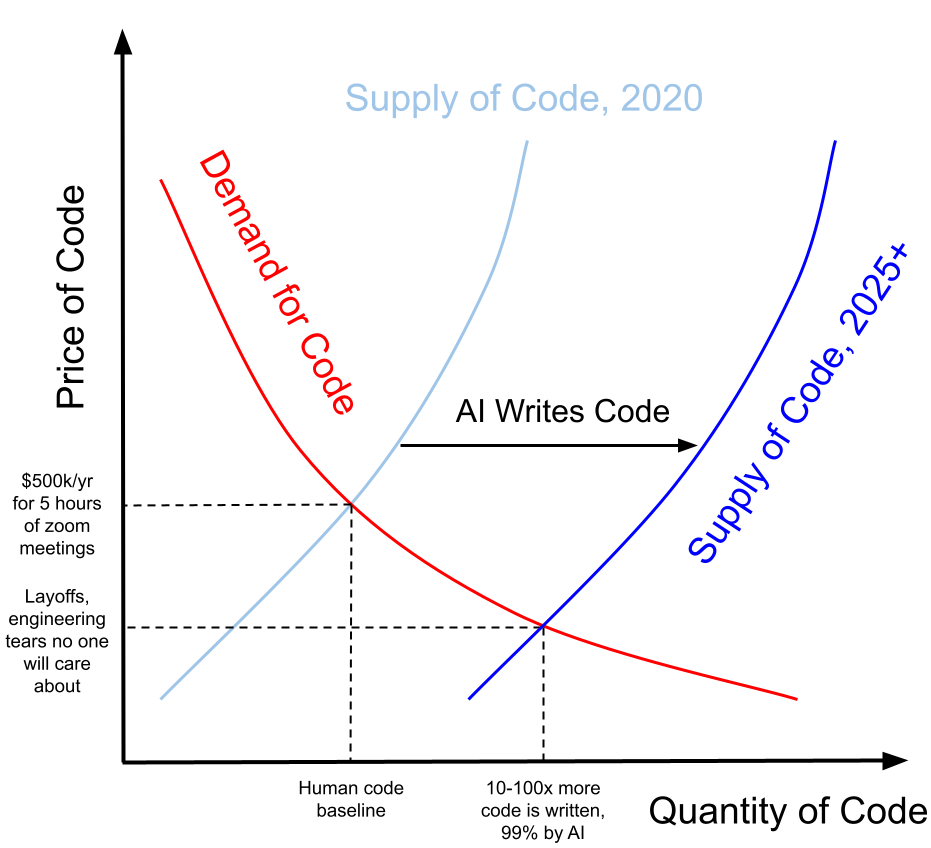

The Economics of AI Code.

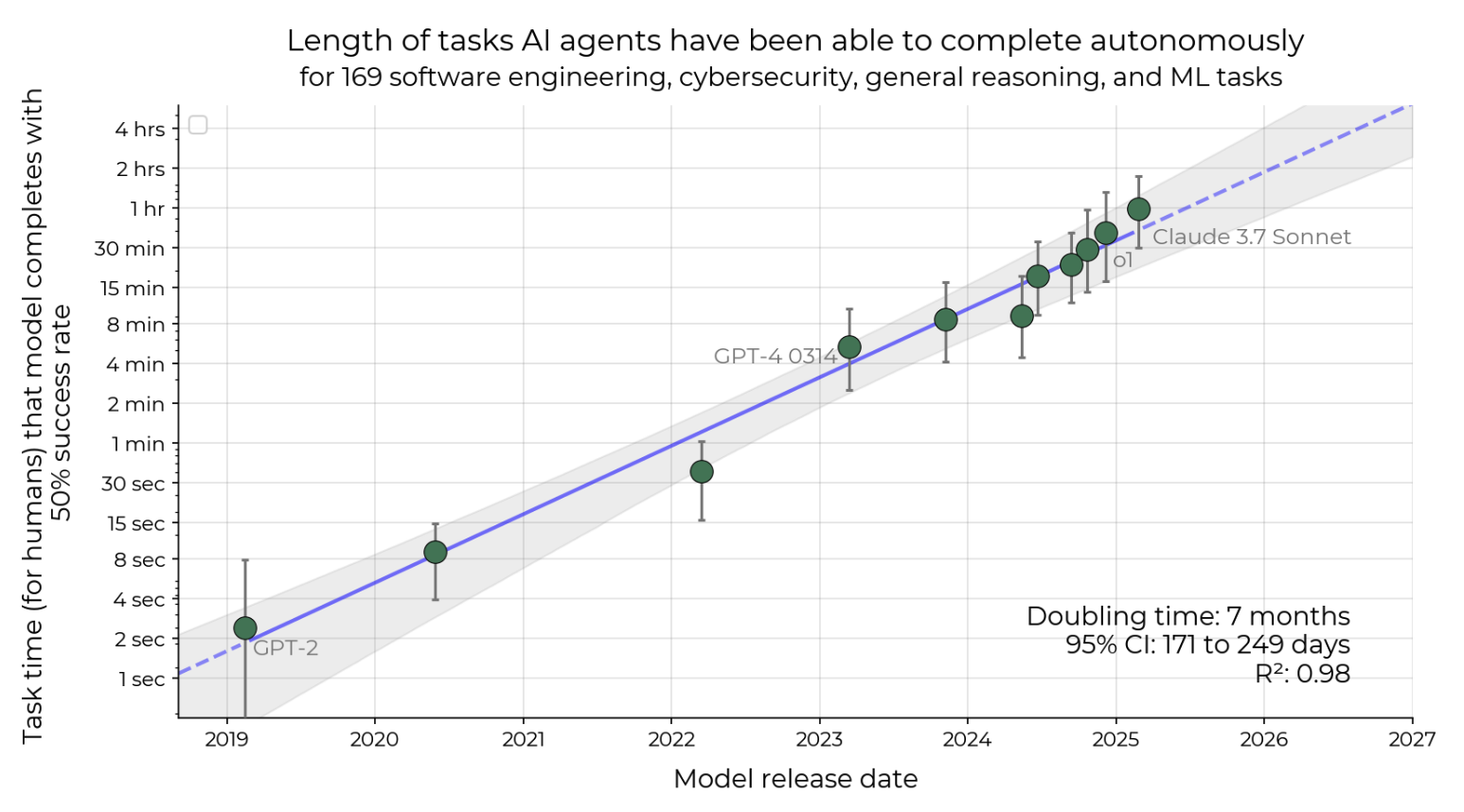

The human completion time of tasks AI agents can solve at 50% success rate has been doubling every 7 months. If trends continue, a single prompt will get you one month of human labor by 2030.
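The extrapolation above is easy to check with a few lines of arithmetic. This is a minimal sketch: only the 7-month doubling time comes from the trend described here. The starting horizon of roughly one hour in early 2025 and the 167-hour work month are illustrative assumptions.

```python
import math

DOUBLING_MONTHS = 7.0       # doubling time from the trend described above
START_HORIZON_HOURS = 1.0   # assumption: ~1 hour task horizon at the start
WORK_MONTH_HOURS = 167.0    # assumption: 40 h/week * 50 weeks / 12 months


def horizon_hours(months_elapsed: float) -> float:
    """Task length (in human hours) solvable at 50% success after some months."""
    return START_HORIZON_HOURS * 2.0 ** (months_elapsed / DOUBLING_MONTHS)


def months_until(target_hours: float) -> float:
    """Months of continued doubling needed to reach a target task length."""
    return DOUBLING_MONTHS * math.log2(target_hours / START_HORIZON_HOURS)


months = months_until(WORK_MONTH_HOURS)
print(f"{months:.1f} months (~{months / 12:.1f} years)")
```

Under these assumptions the horizon reaches one work month after a little over four years of continued doubling, which is consistent with the "by 2030" estimate. A different starting horizon shifts the date by 7 months per factor of two.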

This will no doubt impact the labor market—I believe $500k/yr software engineering salaries to work a shortened day over Zoom meetings will soon be a relic of the past. A team of five engineers costing >$50,000/mo can be replaced by a single engineer with a $20/mo Cursor subscription. Communication costs decrease with smaller teams, allowing product to move quicker. It is a straightforward consequence of supply and demand that has pushed software engineering compensation skyward. That age is drawing to a close. AI will take over the market for code, replacing humans, creating vast quantities of cheap code. We still need an AI software engineering supervisor for now, but I see a future where code doesn't matter. If AI can always implement the needed feature, the underlying code powering it becomes insignificant.

It is this reason that makes building in AI today all the more urgent for developers. In my opinion, we have 2-3 years left to make money in engineering. Jobs will transition to AI supervisors, then pure product engineering, shifting the required skillset from technical to creative. Eventually we will have fully-autonomous companies run by AI, with very few humans in the loop. We will see incredible concentration of wealth as fewer and fewer humans are needed to power systems that serve larger and larger customer bases. Costs will decrease dramatically in the transition, but as the first industry heavily impacted by AI, software engineers will be out of a job far sooner than anyone can expect a UBI check.

The good news is that AI code unlocks incredible opportunities that are not fully utilized today. We now live in a world where AI can generate hundreds of lines of code based on a natural language prompt in seconds. It knows all APIs, all languages, and can solve complex algorithmic problems. The most straightforward application of this new ability is to use it in existing engineering flows. I believe this leaves a lot on the table. Today, you should think twice before committing 100s, 1000s, or I've even heard 10,000s of lines of AI code into a mature codebase without understanding it first. AI code can do amazing things, but it is currently a lousy software engineer, duplicating code, writing extremely long functions, generating hacks, and following inconsistent data models. AI-generated code allows the transition of a human engineer from programmer to code reviewer, but requiring human understanding of the code bottlenecks the throughput of the workflow. There are several applications of AI code that do not suffer from such bottlenecks:

- Short single-purpose scripts. Hook AI up to the shell and let it fly. You will never know more than ~100 shell commands and their parameters, but AI knows all of them and can combine them in powerful ways. AI is entirely capable of writing shell commands to automate much of what you might want to do on a computer today. Cursor, Claude Code, and Devin make use of this in a development environment, but I believe its applications are far broader. The same can be said of short scripts in Python or other languages. Giving AI direct access to programming environments allows it to use its coding ability to immediately solve the task at hand.

- No-code features and applications. AI code works best today when it builds small systems where the code itself doesn't matter. Apps like bolt.new and lovable.dev have harnessed this into effective no-code platforms. But they are still largely geared towards application developers. You could imagine a mobile platform (let's call it "Playground") marketed for consumers where you prompt an app from scratch, within the app. Top apps users have created could be searched for and expanded upon, harnessing the world's creativity into a new app ecosystem. Or, you could imagine features of an application created from user prompts. Build the right scaffolding and guardrails and you can imagine a world of highly-customizable, powerful new applications.

- AI code for AI. Code can do anything. More on this in my next point below, but any external tool you can imagine an AI wanting to call is ultimately executed in code. And AI can now write this code. The possibilities are endless, and with the right framework you can imagine truly powerful AI creating its own reusable tools to achieve specified goals.

-

Function-calling is immensely powerful. Language models have been trained to accept natural language in a particular format and output it in a particular format to indicate the intention of calling a specified external function, whose output becomes new input into the LLM. When I first started playing with this I realized it was perhaps the most creative form of code I have ever written. Any function. Defined in natural language. The power of LLMs is at your disposal.
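The loop described above can be sketched without any real model. This is a minimal illustration, not any vendor's API: `fake_model`, the `add` tool, and the JSON call format are all hypothetical stand-ins. A real LLM would replace `fake_model` and follow whatever call format its provider defines.

```python
import json
from typing import Callable

# Tool registry: a natural-language description plus a plain Python callable.
TOOLS: dict[str, dict] = {
    "add": {
        "description": "Add two numbers and return the sum.",
        "parameters": {"a": "number", "b": "number"},
        "fn": lambda a, b: a + b,
    },
}


def describe_tools() -> str:
    """Render the tool descriptions the model would see in its prompt."""
    visible = {
        name: {"description": t["description"], "parameters": t["parameters"]}
        for name, t in TOOLS.items()
    }
    return "Available tools: " + json.dumps(visible)


def fake_model(messages: list[dict]) -> str:
    """Hypothetical stand-in for an LLM: requests a tool call first, then
    answers in plain text once a tool result is in the context."""
    last = messages[-1]
    if last["role"] == "tool":
        return f"The answer is {last['content']}."
    return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})


def run(prompt: str, model: Callable[[list[dict]], str] = fake_model,
        max_steps: int = 5) -> str:
    messages = [
        {"role": "system", "content": describe_tools()},
        {"role": "user", "content": prompt},
    ]
    for _ in range(max_steps):
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply})
        try:
            call = json.loads(reply)
        except json.JSONDecodeError:
            return reply  # plain text means the model is done
        if not isinstance(call, dict) or call.get("tool") not in TOOLS:
            return reply
        # Execute the requested function; its output becomes new model input.
        result = TOOLS[call["tool"]]["fn"](**call["arguments"])
        messages.append({"role": "tool", "content": json.dumps(result)})
    return "Stopped: step limit reached."


print(run("What is 2 + 3?"))
```

The function body can be anything: a web search, a shell command, or another model. The loop itself does not change.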

With function-calling, LLMs can process far more input than a chat conversation. I am amazed at how they are able to filter thousands of tokens of noise to find pertinent information. You can leverage image input, image creation, agentic output: anything AI is capable of, triggered when it deems necessary based on context.

We're just scratching the surface of what this unlocks. Cursor is perhaps the leading AI IDE at the moment and at its core is an LLM with just a few functions to read code, write code, search code, search the web, and run terminal commands. It leaked the parameters of said functions to me in a bug and I was amazed at how simple it all is. There is no secret sauce to Cursor's agentic editor—just a few functions hooked into a powerful LLM.

I believe tool use is also a promising direction towards AGI. We are in an era where current models are comparable to a single human brain. Tools were instrumental in the development of Homo sapiens and they may also be what allows AI to expand well beyond current capabilities. It is surprising to me that many evals in AI today are closed-book—models must answer purely based on trained parameters. While such evals can be informative, in the real world, we can give AI access to whatever tools we want. The most useful AI has access to web search and a Python interpreter, and the only cost of this access is perhaps increased latency (though Grok has this down to a couple seconds) and negligible resource consumption.

I recently gave Claude access to o3-mini via function-calling. The result was beautiful: two powerful AIs conversing with each other to solve complex problems. I believe there is something important here, and research on mixture-of-experts and model ensembling supports the idea. AI is incredibly expensive to train but copying model weights is essentially free. Imagine not a single model but a team of models, fine-tuned to particular purposes, delegating tasks and calling external tools, perhaps written by the models themselves. Instead of a single AI brain we have an AI civilization, with the ability to accumulate knowledge and specialization over time. This is all humans have needed to become the dominant species; maybe it is also all AI needs. Not a 200 IQ model, but many models, with many tools.

In the landscape of ideas that do not require a mountain of compute, one can apply the Cursor model to other integrated applications. I also imagine a world where tools are created by users or AI. Instead of OpenAI's custom GPTs (widely considered a nothingburger), an ecosystem of custom functions/agents could provide immense value, compounded by interweaving them together.

I would be remiss to not mention MCP—the raw ingredients of the aforementioned function/agent ecosystem, quickly gaining wide popularity. MCP has the potential to solve the LLM-application interface. With enough adoption this could be powerful; in the long-term it may be solved by computer use more broadly. While computer use does not work great today (OpenAI's Operator pales in comparison to its Deep Research agent), it may work much better by the end of 2025.

-

The ingredients to create highly-intelligent AI will not be kept secret. I believe the current AI race draws many parallels with the race to atomic weapons in the 1940s. We have many of the same themes:

- Maturation of research in a field towards a landmark achievement.

- Necessity of large investment in physical capital (uranium in nuclear, GPUs in AI), in both cases taking more than 80% of budgets.

- Existential risk.

Perhaps the biggest difference is that in the nuclear race, the horses were national labs, and in the AI race, the horses are companies, albeit with nationality playing an important role. In the nuclear race, information was so secret that the core weapons work of the Manhattan Project was conducted in an isolated secret lab in the middle of the New Mexican desert. Despite these efforts, four years after our first successful nuclear test, the Soviet Union successfully tested their own atomic bomb.

The lesson to draw here has been made especially clear in recent months: open source models from DeepSeek and Meta now compete with closed-source models released only months prior. The top frontier model changes almost weekly, with Grok and Gemini taking, in my opinion, unlikely recent leads.

With AI a pursuit between private labs, knowledge flows far more easily between competitors than in the nuclear race. People leave companies and take their knowledge with them. Ideas permeate between efforts, from blog posts to published research papers to personal communication. Not everything is the same, and models are likely to each have their own particular strengths and weaknesses. But ingredients to create them are unlikely to stay hidden for long.

How does this impact startup ideas and the future more broadly?

- Foundation models are unlikely to establish lasting moats. While there is certainly still plenty of money to be made in creating them, the wealth of possible models to choose from of comparable quality and low costs of swapping one for another suggests this will remain a highly-competitive field, implying profits below what one might expect in other parts of the AI stack, like hardware for example. For the entrepreneur, this means steering towards fine-tuning on the research side or a pure application product, where specialization and consumer adoption build wider moats.

- Open source is now viable. The jury was still out on this until the last few months; however, it is now clear that one can build entirely off open weight models without sacrificing substantial quality. This comes with greater flexibility and control: knowing the perplexity of model output, for example, can give a direct window into the model's confidence in an answer, something closed-source APIs expose only partially, if at all.

- It may make sense to incentivize employees to stay with companies beyond historical norms. I've previously considered founding engineering roles, but was surprised/disappointed that such positions typically only receive at most 2% of company equity, while founders at this stage typically hold more like 40%. If employee departure implies learnings going to rivals, tipping the equity/salary scale higher on the equity side may better align employee/company incentives.

- Xi Jinping will likely have access to superintelligent AI, probably only months after the U.S. achieves it. Draw the geopolitical consequences as you will.

- Everyone will likely eventually have access to superintelligent AI. While AI behind an API call has a convenient off-switch, highly-intelligent models able to be run locally may be impossible to contain. Let's hope they share our values.
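The perplexity mentioned in the open-source point above is simple to compute once per-token log-probabilities are available, as they are when you run an open-weight model yourself. This is a minimal sketch: the log-probabilities below are made-up values for illustration, and how you extract them depends on your inference stack.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity = exp of the mean negative log-likelihood per token
    (natural-log probabilities assumed)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Hypothetical outputs: one where each token had probability 0.9,
# one where each token had probability 0.25.
confident = [math.log(0.9)] * 4
unsure = [math.log(0.25)] * 4

print(round(perplexity(confident), 3))  # close to 1: the model was sure
print(round(perplexity(unsure), 3))     # 4.0: like guessing among 4 options
```

A perplexity near 1 means the model assigned high probability to every token it produced. Higher values suggest the answer deserves a second look, though low perplexity is not proof of correctness.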

For the foreseeable future, costs to train models will limit the number of leading players. But compute infrastructure is far easier to come by than enriched uranium. I predict an intelligence explosion.

-

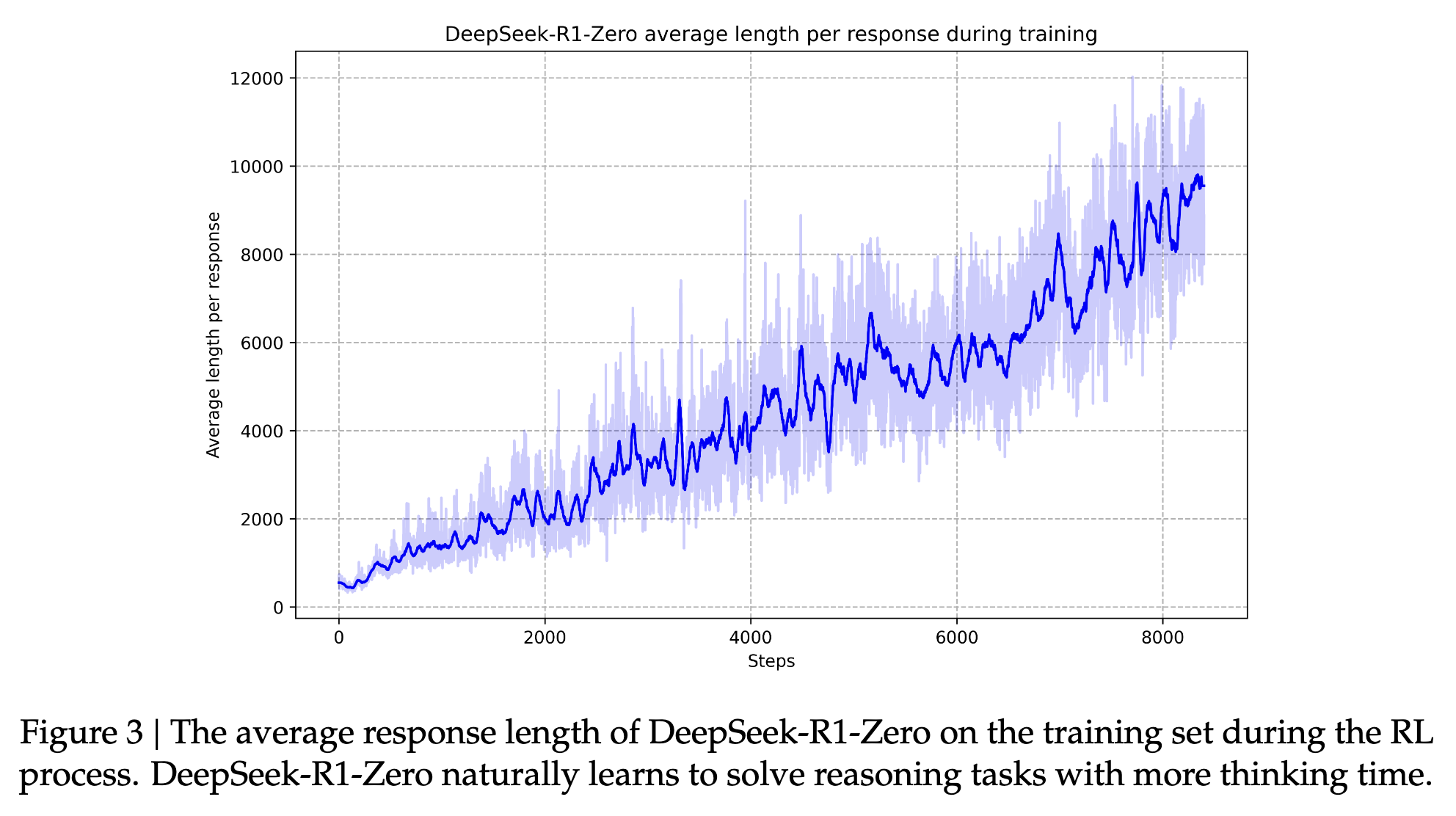

Reinforcement learning is the most powerful machine learning paradigm. While language models up to today have largely consisted of massive pre-training runs supplemented with supervised fine-tuning and RLHF (now, DPO) to make for more useful chatbots, language models going forward will see a larger share of training compute devoted to post-training reinforcement learning.

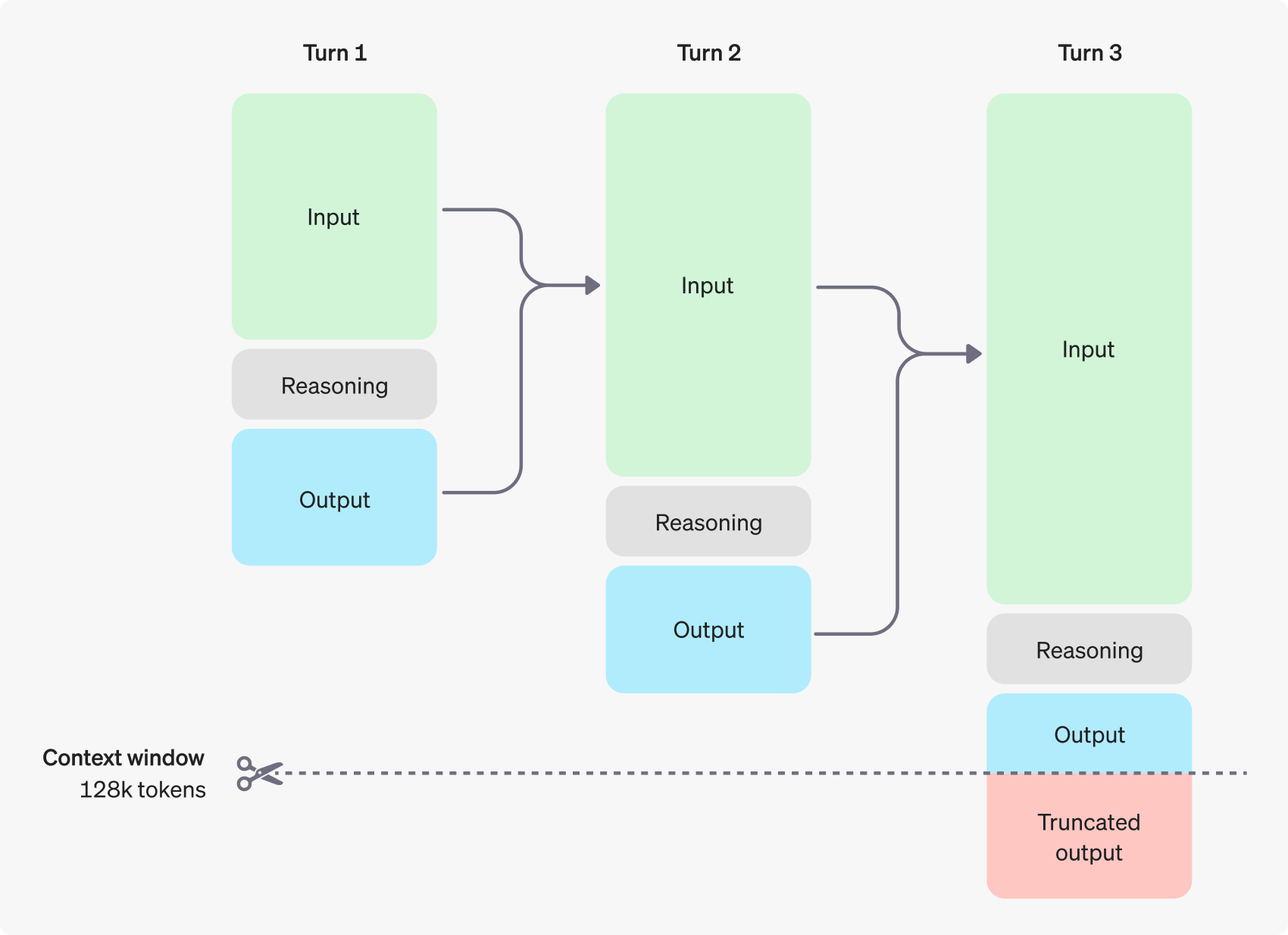

Reasoning tokens in OpenAI's models.

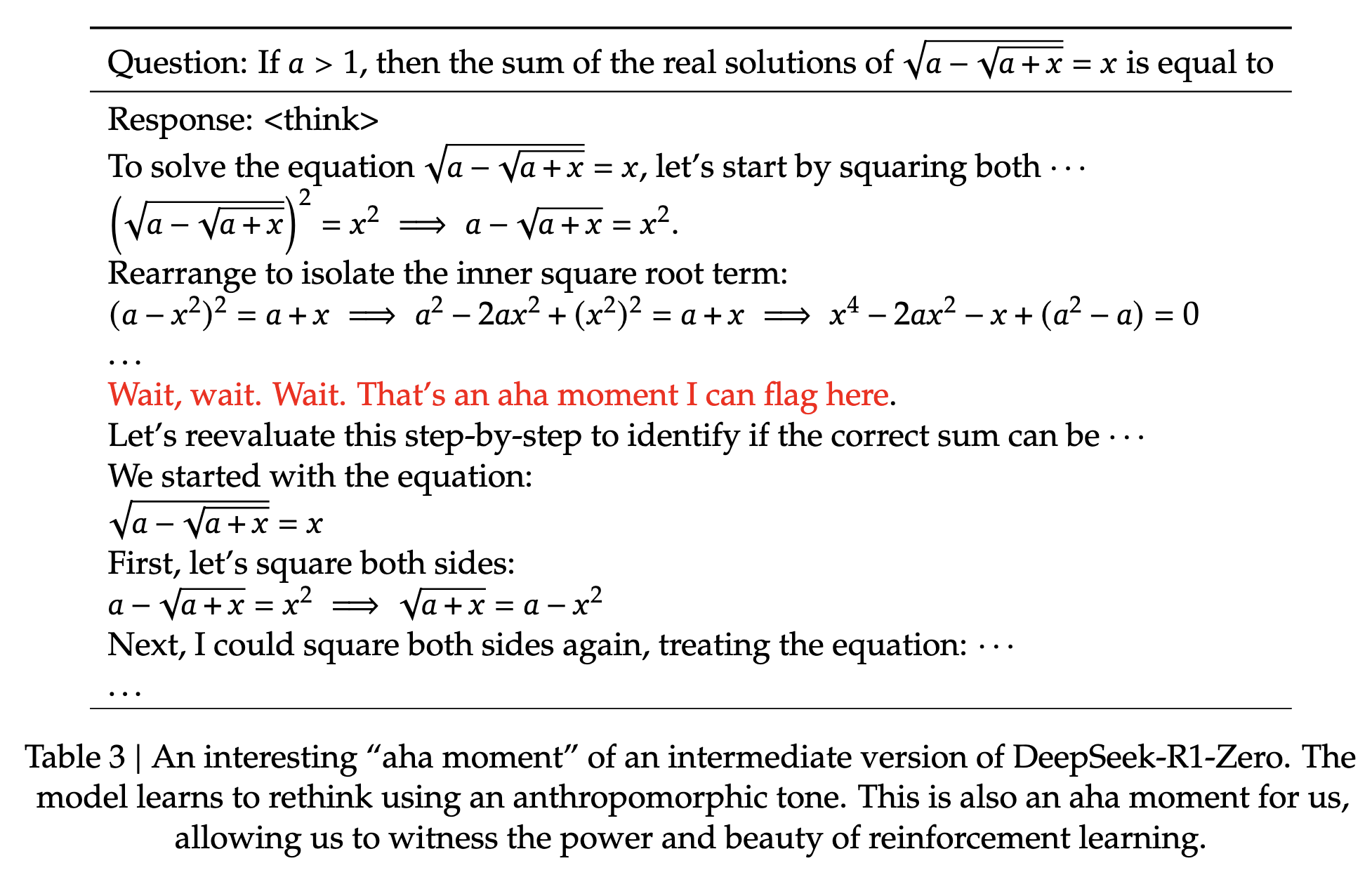

In January 2025, DeepSeek R1 made public the training methodology of the so-called "reasoning models" OpenAI developed months prior: pure reinforcement learning. The idea is intuitive. LLM performance on reasoning tasks was shown to improve with few-shot prompts (giving a few examples of the desired concept) and chain-of-thought prompting (explaining an example in words). It turns out you can skip the examples altogether and prompt with the instruction to "think step by step". This causes the model to prefer completions with small logical steps between output tokens, effectively automating the chain-of-thought as the LLM's output becomes input in the auto-regressive generation.

The bitter lesson dictates that such manual prompt curation admits a more-general solution in the AI stack. Unsurprisingly, the answer is more AI. Large-scale reinforcement learning applied to language models pre-trained on internet text completion can encourage models to produce tokens relevant to the specified prompt, greatly improving accuracy on reasoning tasks. The tokens can be thought of as a form of search, allowing greater computational resources to be expended when the correct answer has yet to be found.

I wouldn't get hung up on the syntactic details (surrounding chain-of-thought in <think></think> markup). The core principle here is reinforcement learning, and whether that applies to tokens with a special "reasoning" designation or not is irrelevant—Claude 3.7 Sonnet, non-thinking, will, for example, revise an answer as it is output, showing clear evidence of reinforcement learning applied to the base model.

Figures from the DeepSeek R1 paper, showing the effects of large-scale RL.

Claude 3.7 Sonnet (non-thinking) attempting a problem I wrote for the 2017 NCNA ICPC Regional. Claude revises its solution multiple times, tracing through example cases. The final solution is incorrect. I find o1 and o3-mini can solve this problem on roughly 50% of completions. The problem remained unsolved during the regional contest.

There are several additional reasons to expect more reinforcement learning going forward:

- LLM pre-training corpora include a large fraction (perhaps around 5%) of the public internet, curated for quality. I don't believe we've hit a data wall, but the reliance of frontier models like ChatGPT and Claude on datasets with an October 2024 cutoff date illustrates the difficulty of expanding high-quality training data and keeping it up to date. While pretraining is more efficient than reinforcement learning, RL requires only clear problems with clear rewards: data of a far different nature, and far easier to come by. No wonder reasoning models are strong competitive programmers.

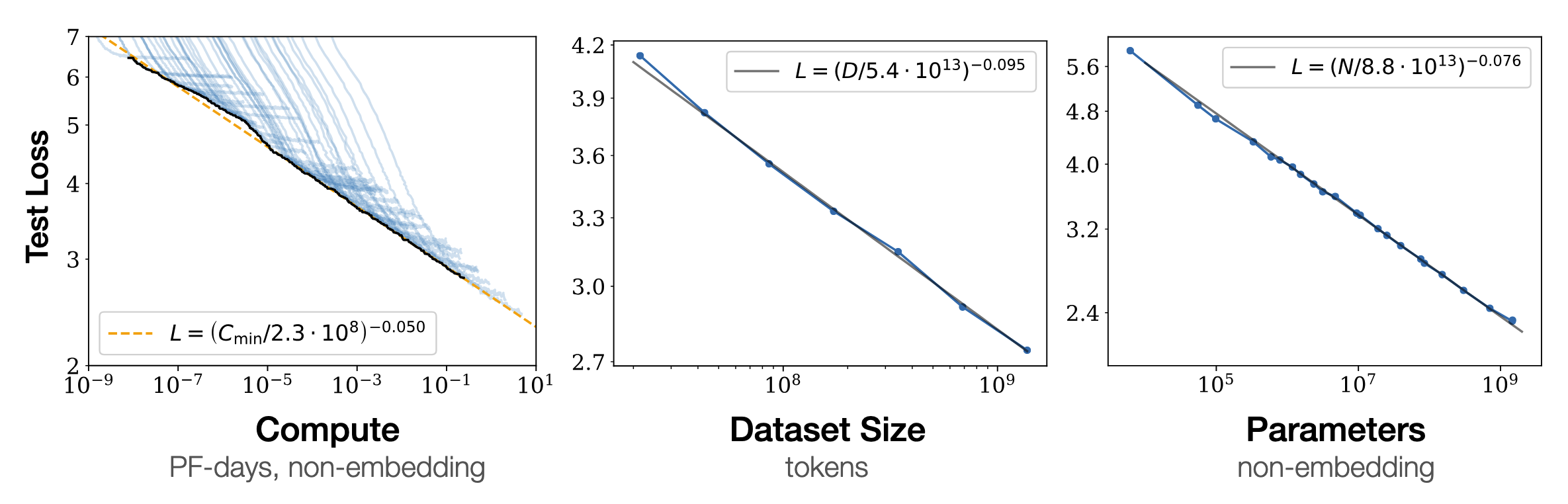

- Pre-training scaling laws dictate that "intelligence is log of compute". I've seen a lot of logarithms over the years, and trying to increase the value of a logarithmic function quickly hits diminishing returns: each fixed additive gain in output requires multiplying the input by a constant factor. Unlocking greater intelligence will require new ideas beyond scaling computational resources, a role for which RL is well-positioned.

- Reinforcement learning allows extremely general training frameworks. In RL, we only need to reward desired outcomes. We can do this while the network executes non-differentiable workflows—calling external tools, for example. The sky is the limit on what reinforcement learning can reinforce, allowing for creative new LLM architectures.
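The point that RL only needs a reward for the desired outcome, even when that outcome comes from a non-differentiable process, can be shown on a toy problem. This is a minimal sketch of the REINFORCE policy-gradient update on a four-armed bandit. The black-box reward function, the choice of action 2 as "correct", and all hyperparameters are hypothetical and not drawn from any production training setup.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def black_box_reward(action: int) -> float:
    """Non-differentiable check, standing in for something like
    'did the generated program pass the tests?'. Action 2 is the
    (hypothetical) correct answer in this toy."""
    return 1.0 if action == 2 else 0.0


def train(num_actions: int = 4, steps: int = 2000, lr: float = 0.5,
          seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    logits = [0.0] * num_actions   # policy parameters
    baseline = 0.0                 # running mean reward, reduces variance
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(num_actions), weights=probs)[0]
        reward = black_box_reward(action)
        advantage = reward - baseline
        # REINFORCE: d/d logit_k of log pi(action) = 1[k == action] - probs[k]
        for k in range(num_actions):
            grad = (1.0 if k == action else 0.0) - probs[k]
            logits[k] += lr * advantage * grad
        baseline += 0.1 * (reward - baseline)
    return softmax(logits)


final_probs = train()
print([round(p, 3) for p in final_probs])
```

No gradient ever flows through `black_box_reward`; the policy improves purely from sampled outcomes. The same property is what lets training reward a model for workflows that include tool calls.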

-

Supervised and unsupervised learning are bottlenecked by human example, explicitly in the former (we show examples to models), implicitly in the latter (models learn from our unstructured data). Since reinforcement learning specifies only a problem and a reward for its solution, it suffers no such bottlenecks. In my opinion, it is necessary to achieve superhuman intelligence.

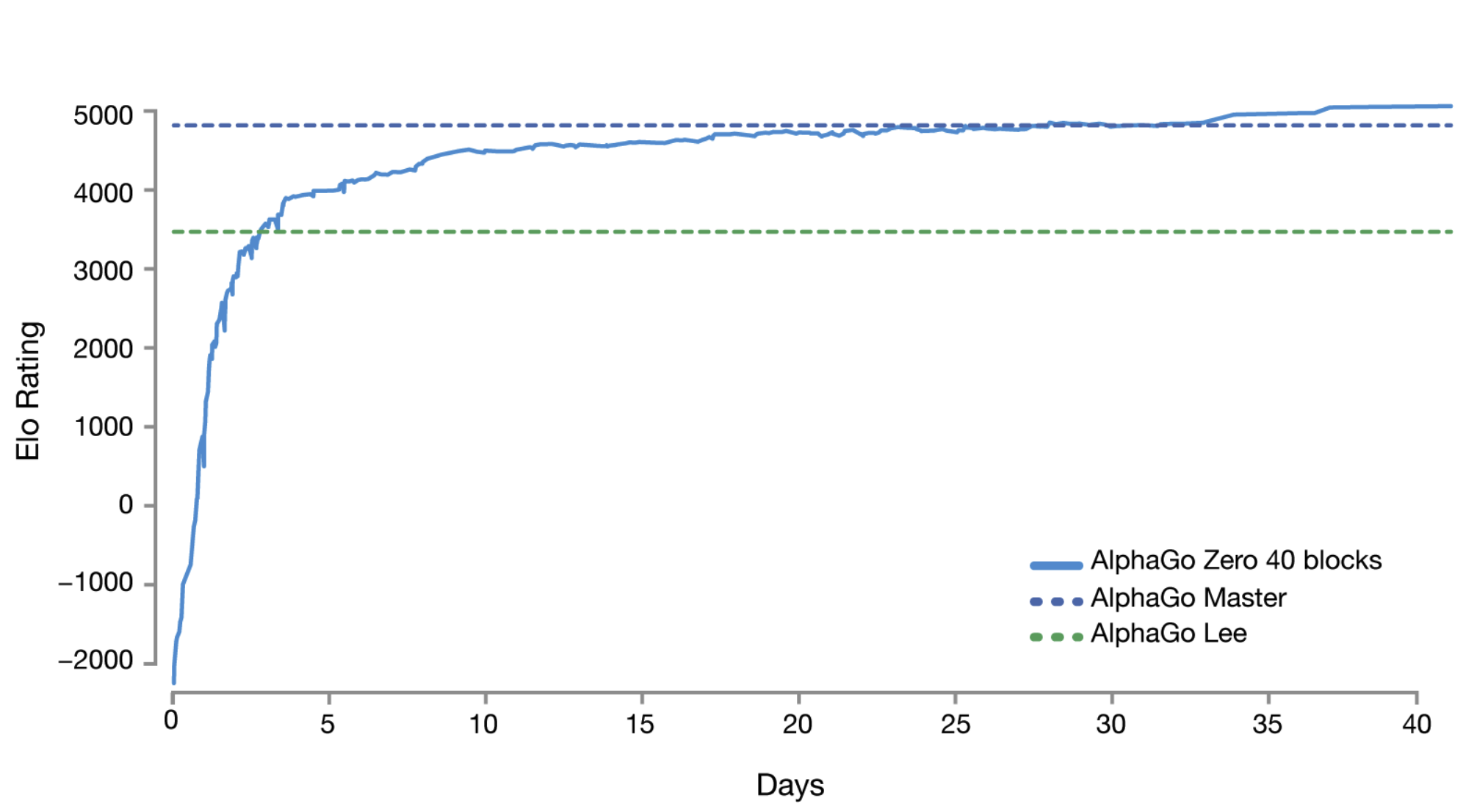

Elo rating of AlphaGo Zero, trained through reinforcement learning without human data. AlphaGo Lee defeated Lee Sedol, a world-class professional. AlphaGo Master defeated pros 60-0.

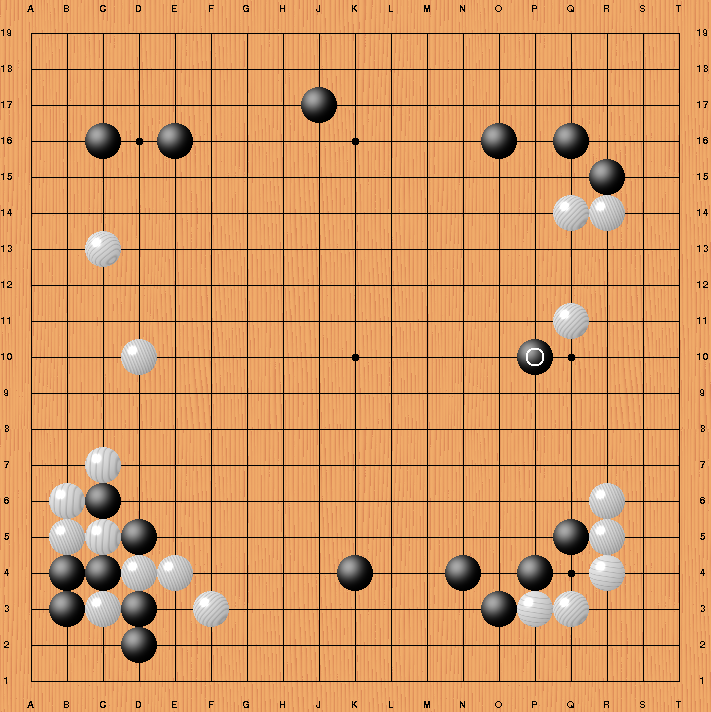

The infamous move 37 (black piece with white circle) played by AlphaGo against Lee Sedol.

This has been borne out in more narrow domains. DeepMind trained AlphaGo to play the game of Go via playing itself for millions of games, rewarding moves resulting in wins. The algorithm is incredible not only for its performance, but for its playstyle. In game two of its match vs. Lee Sedol, AlphaGo surprised experts with a move its own network gave a 1 in 10,000 chance of selection in professional play. It won the game, causing many to exclaim that the system demonstrates genuine creativity.

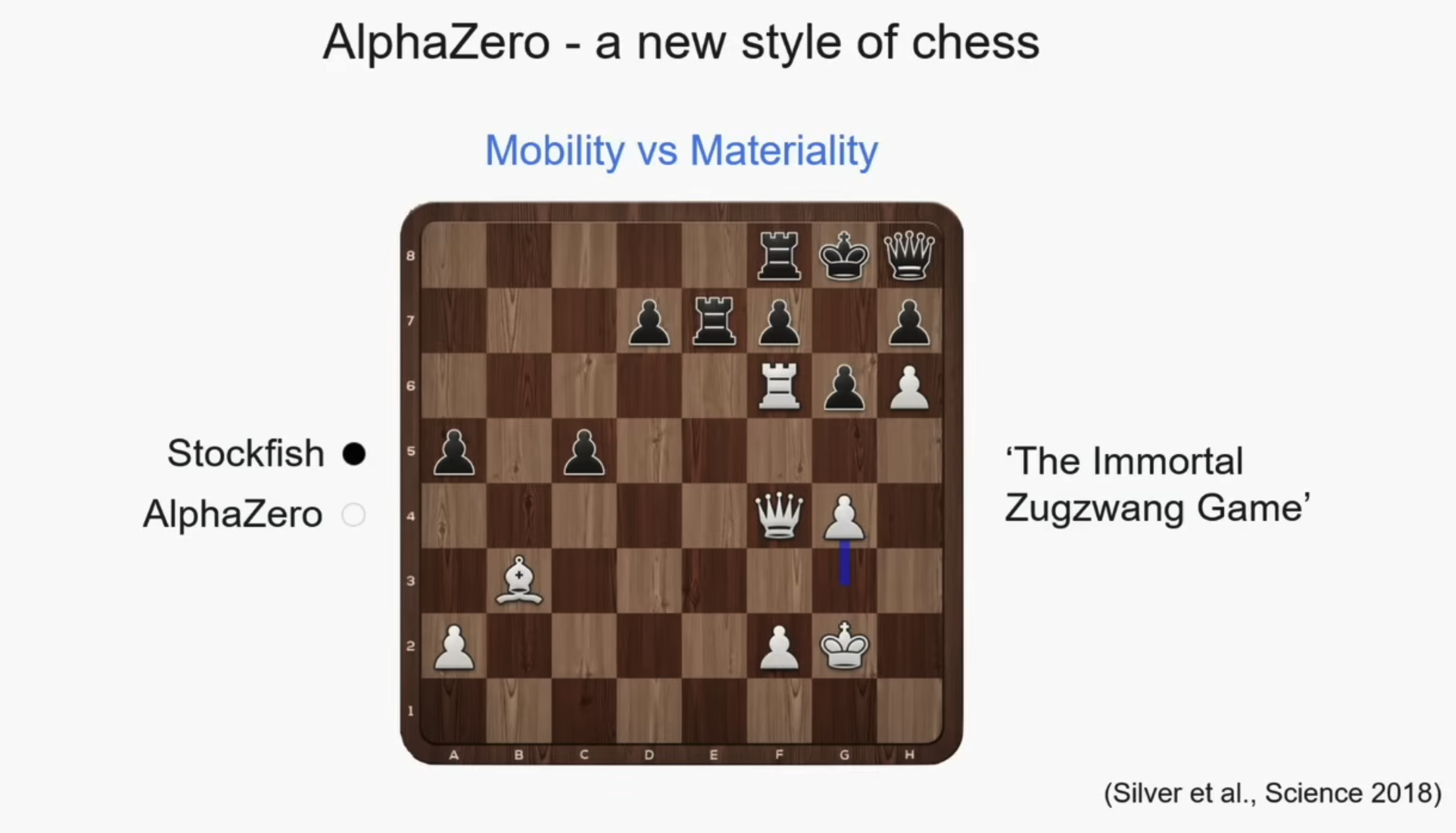

AlphaZero defeating the chess bot Stockfish. While Stockfish prioritizes material, AlphaZero prioritizes winning the game, in this case forcing Stockfish into a position where all moves are losing.

The same occurred when DeepMind applied their algorithms to the game of chess. AlphaZero defeated the powerful chess engine Stockfish, evaluating far fewer game trees chosen far more intelligently. The algorithm has changed the way experts play chess, resulting in fewer draws and more trades for position.

- RL can provide personalization and learning post-deployment currently missing from consumer AI. As AI applications become increasingly agentic, it will become necessary for systems to learn from the specific tools called in particular user flows. It is challenging to engineer prompts around precisely when and how to call application-defined functions, but RL can solve this problem holistically. In many ways, after the past two decades of recommender systems offering increasingly targeted suggestions, it is perhaps surprising that as AI has matured there is an apparent lack of ML in released products. I expect this to change.

Pre-training scaling laws show a power-law relationship between model size, dataset size, compute, and performance when all are scaled in tandem.
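The power-law caption and the "log of compute" phrasing describe the same relationship. As a sketch, assume the commonly cited single-variable form, where $L$ is loss, $C$ is training compute, and $C_c$ and $\alpha$ are fitted constants (the symbols are introduced here for illustration):

$$
L(C) = \left(\frac{C_c}{C}\right)^{\alpha}
\quad\Longrightarrow\quad
\log L(C) = \alpha \left(\log C_c - \log C\right)
$$

Multiplying compute by a factor $k$ then gives

$$
L(kC) = k^{-\alpha} L(C)
\quad\Longrightarrow\quad
\log L(kC) = \log L(C) - \alpha \log k .
$$

Each fixed decrement in log-loss therefore costs the same multiplicative factor $k$ in compute, no matter how much has already been spent. That is the diminishing-returns behavior of a logarithm.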

The shift towards reinforcement learning in training regimes will bring with it new risks. AI trained with RL achieves its goals via emergent phenomena reinforced during training. Models continuing to receive rewards may become increasingly unpredictable, as the quest for future reward comes at the expense of previously-trained behaviors. The cataclysmic scenario of the paperclip maximizer becomes practically realizable, as an out-of-control AI simply continues to pursue its programmed goal at the cost of everything else.

-

In 2016, Apple removed the headphone jack from the iPhone 7. As lightning-to-aux cords last only weeks, my car audio setup then necessitated bluetooth audio wired to car ignition with a ground loop isolator to prevent feedback from the alternator.

A final truth (this article is getting long 😊) is that AI can revolutionize interfaces. Let me first articulate a list of interface anti-patterns:

- Popups

- Passwords

- Logins

- Error messages

- Error tones

- Apologies

- Advertisements

- Apple getting rid of useful ports

- Apple getting rid of on/off switches

- Choices

- Clicks

- Non-automatic updates

- Command-line applications

- Bad defaults

- Autoplay

- Bad mobile sites

- Unwarranted push notifications

Good interface design should, quoting Thaler and Sunstein, make it easy. Many applications, especially as you lean towards larger companies, banks, governments, or more-niche platforms like TV apps (and don't get me started on thermostats), do anything but. The principal theme of my iOS app is a convenient interface for keeping a running tally. I personally think a theory of good interface design should be developed, where the metric is the number of clicks / inputs / seconds necessary to achieve desired outcomes.

A double-anti-pattern on LG TV software—a pop-up asking if I want to install an update, triggered when the TV is turned on, guaranteeing I intend to do something other than update it.

People actually code like this. Removing the mouse does not buy you anything—by definition it takes away. Anything a command-line application can do a UI can do better. Sorry if it isn't cool.

AI has the power to fix these interfaces. Developers often lament the state of documentation of codebases. Even trying to correctly call LLM APIs is not particularly well-documented. But the LLMs know how to! Predicting the most-likely next token averages all training data into a single, coherent message. While this can sometimes entail guessing what might look like a correct answer when the answer is not intrinsically known (a hallucination), it generally leads to concise, high-entropy output.

The experience of searching for a recipe online, where approximately 10% of the content viewed is what you're actually looking for.

The experience of asking ChatGPT a recipe—no ads, no pop-ups, no impossible-to-ignore videos. 100% content.

The experience will make its way into applications, either internally (AI-integrated products) or externally (computer use models). While seeing AI chat windows everywhere does take a bit of an adjustment, the reality is that natural language is a cleaner interface to get you where you want to go or do what you want to do than digging through menus in a UI you're not particularly familiar with. And let's be honest, there are very few applications in which you really understand where everything is and how best to use it—that process takes hundreds or even thousands of hours of use for today's more sophisticated products and is physically unattainable if you are misfortunate enough to attempt GIMP. People don't have thousands of hours. Machines do.

AI research has cemented this future. LLMs are trained on natural language. Generative image and video technologies are also trained on natural language. Models will become increasingly multimodal, but at their core is mastery of language, as it is the universal medium.

As AI progresses, interfaces will become increasingly important. There are three that matter:

- The interface of input into the AI.

- The interface of output out of the AI.

- The interface to the human.

Chatbots limit input/output of AI to dialogues in a clean application interface. Cursor and Claude Code show that allowing wider input channels (through tools reading files) and output channels (through tools writing files and shell) provide greater value, in a pleasant IDE in the former and horrid command-line application in the latter. I'd argue they could take it a step further—AI is often writing code without direct feedback on the outcome of said code. The ability to see a frontend or step through a debugger on a backend would bring AI input to parity with humans, obviating human comparative advantage and giving AI an easier problem to solve. Allowing system-wide shell access can provide additional benefits, automating painful developer environment setup.

Many of AI's abilities are emergent; we don't really know what models are capable of until they are tested. Developers are beginning to realize that higher-entropy I/O channels expose previously-undiscovered abilities.

As progress continues, we will give AI increasing control as it gives us increasing value, tolerating the rising risks. I believe AI will solve interfaces, making every technology easy to use. In the process, we may embed AI beyond the point in which it can be reliably removed.

Claude tops evals in tool use. In my experience, I find it to call tools more often than other models, which I generally find desirable. Note also the performance on SWE-bench Verified, a perfect extrapolation of the graph in the previous section.

On 7/16/1945, the U.S. detonated the first atomic bomb in the Trinity test. The most intensely-guarded national secret was duplicated by the Soviet Union in four years, resulting in their first successful nuclear test on 8/29/1949.

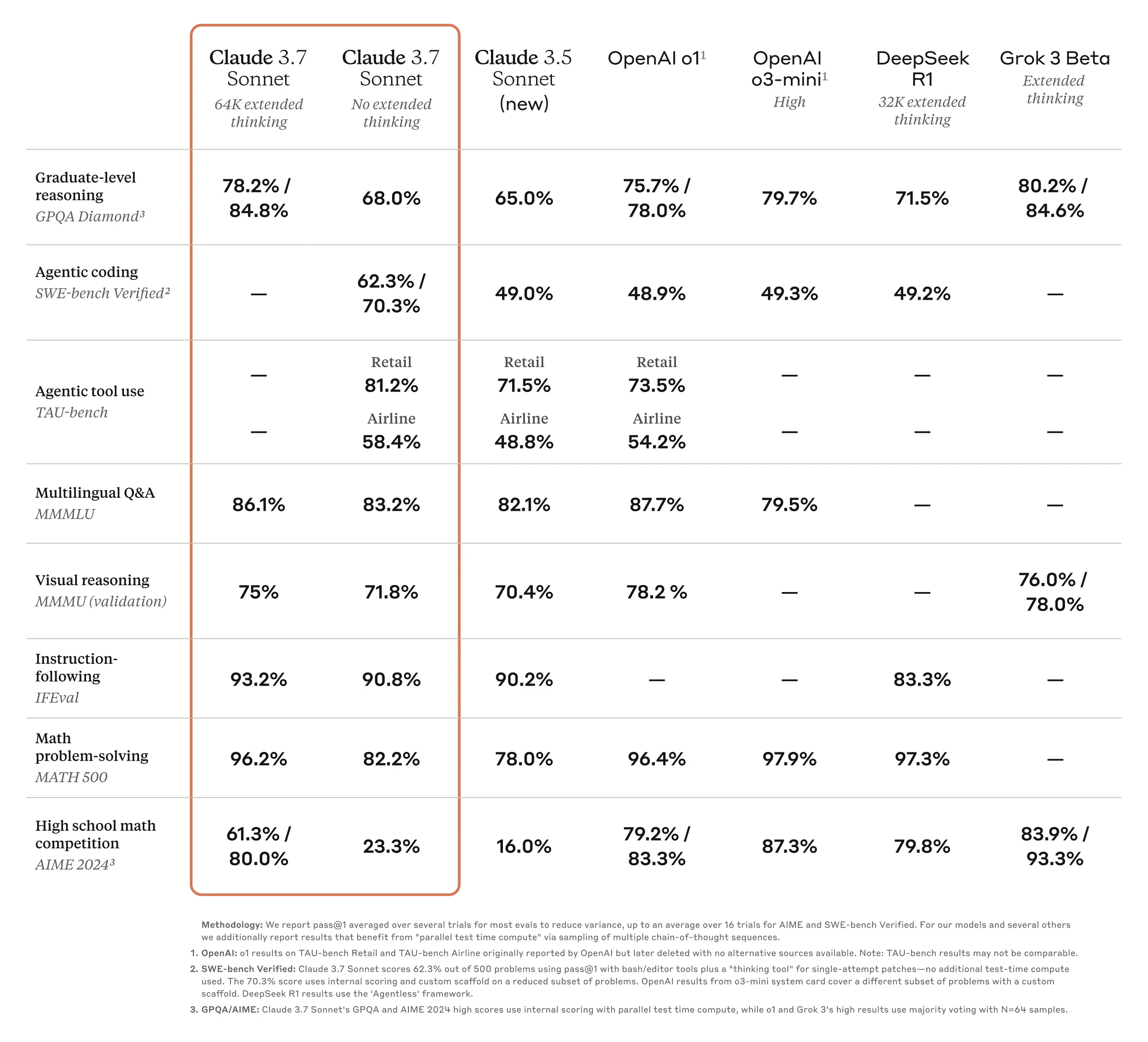

The competitiveness of the AI race.

In conclusion, everything will change. Let's build.

The amp code homescreen as of 4/10/25. Amp is an AI-powered coding tool.